Blog

April 28, 2022

Kafka Connect is an integral tool leveraged by the Apache Kafka ecosystem to reliably move data with scalability and reusability. Because of the Kafka Connect architecture and features, organizations can connect Kafka to the disparate data sources and storage destinations that comprise enterprise data warehouses.

This blog provides a detailed introduction to the Kafka Connect architecture, with an explanation of how Kafka Connect works, its components, common use cases, and how it functions in Kubernetes.

What Is Kafka Connect?

Kafka Connect provides a platform to reliably stream data to/from Apache Kafka and external data sources/destinations.

Apache Kafka is an impressive piece of technology, but what it does with the data traversing the cluster is where the magic really happens. Where is the data coming from? Where is the data going to, and what happens to it along the way? The answer to these questions is Kafka Connect and the unique Kafka Connect architecture.

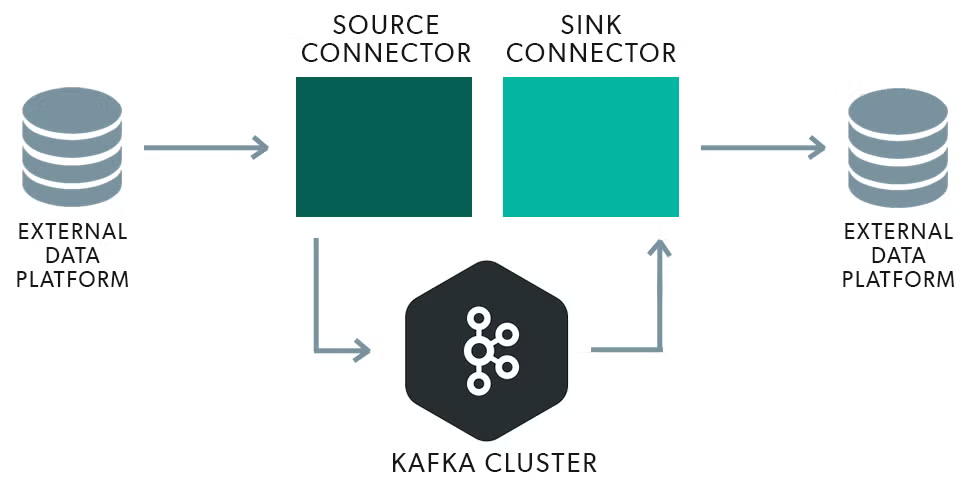

With its core concepts of Source and Sink connectors, Kafka Connect is an open source project that provides a centralized hub for basic data integration between data platforms such as databases, index engines, file stores, and key-value repositories. Kafka Connect provides a pluggable solution to integrate these data platforms together by allowing developers to share and reuse connectors.

Back to top

Back to top

Kafka Connect Architecture and Kafka Connectors

At the core of the Kafka Connect architecture are the connectors. Kafka Connectors are ready-made components that allow for defining date sources and destinations for external systems. Separated out between Source Connectors and Sink Connectors, existing connectors can be used for common data sources or sinks or, new connectors can be developed for more uncommon external systems.

There are some Confluent proprietary connectors in the Kafka Connect architecture, but most popular data platforms, such as Elastic Search, S3, MongoDB, MySQL, and Redis, have an existing connector and are community-maintained. These connectors can usually be found on the project’s Git repo or website. You can find most connectors simply by searching for the project name followed by "Kafka connector." For instance, here is the Kafka connector for MongoDB.

Kafka Source Connectors

A Source Connector defines what data systems to collect data from, which can be a database, real-time data streams, message brokers or application metrics. Once defined, the Source Connector connects to the source data platform and makes the data accessible to Kafka topics for stream processing.

Kafka Sink Connectors

A Sink Connector, on the other hand, defines the destination data platform or the data’s endpoint. Again these endpoints could be any number of data platforms such as index engines, other databases, or files stores.

Back to topHow Does Kafka Connect Work?

Kafka Connect works by implementing the Kafka Connect API. To implement a connector, one must provide a sinkConnector or sourceConnector and then implement sourceTask or sinkTask in the connector code. These define a connector’s task implementation as well as its parameters, connection details, and Kafka topic information.

Once these are configured, Kafka Connect manages these tasks, removing the need to manage task instances and implementation.

Back to topNeed Support for Your Kafka Deployments?

OpenLogic has a wealth of experience integrating, configuring, optimizing, and supporting Kafka in enterprise applications. Talk to an expert today or download our enterprise guide to get started.

Talk to a Kafka Expert

Kafka Connect Use Cases

Some common use cases for Kafka Connect include ingesting application metrics from multiple sources into a single data lake, collecting click-stream activity across multiple web platforms into a single activity view, or just simply moving data from one database to another. In all use cases, Kafka Connect provides a platform to reuse connectors across the entire enterprise.

Another popular use case for Kafka Connect is the role it plays in data warehouse schema registry. Kafka Connect converters can be used to collect schema information from different connectors and convert data types into standardized data formats such as Protobuf, Avro, or JSon Schema. Converters are separated out from the connectors themselves so they can be reused amongst multiple connectors. For instance, you could implement the same converter across an HDFS sink as well as an S3 sink.

Keep in mind, however, that while many Kafka use cases appear to be very similar to traditional ETL use cases, developers and data engineers should use caution when attempting to do any complicated data transformation with Kafka Connect.

Single Message Transformation or SMT is a supported function in Kafka Connect, however it does come at a cost. The true power of the Kafka platform is its ability to move massive amounts of data at break-neck speeds and injecting complicated data transformations into the platform can potentially cripple this design. While simple SMTs are supported, it's generally considered best practice to perform more complicated data transformation before ingesting the data into Kafka Connect.

Back to topKafka Connect in Kubernetes

A great way to implement Kafka Connect is through the use of containers and Kubernetes. The Kafka Operator Strimzi is an amazing project that allows for rapidly deploying and managing your Kafka Connect infrastructure. This provides the ability to define Kafka Connect images with customs resources and deploy them rapidly, which makes managing your Kafka Connect cluster a breeze. Using a standardized description language across all your connectors implementation presents a huge degree of process reusability and standardization.

Watch this video to learn more:

When deploying Kafka on Kubernetes with Strimzi, you can define, download, and implement Source and Sink Connectors from repos like Maven Central, Git Hub, or other custom defined artifact repositories, and let Kubernetes handle their deployment. Strimzi allows you to build Kafka Connect images with a build configuration that contains a list of Connector plugins and the connector configurations into a CustomeResourceDefiniton and deploy those CRDs across your K8s infrastructure.

Final Thoughts

Apache Kafka is arguably the most robust, scalable, and highest performing message streaming platform on the market, capable of handling millions of messages per second. Almost every Kafka implementation would benefit from integrating Kafka Connect into the environment — and with tools like the Stimzi operator as a force multiplier, the power of Kafka Connect to transform your data operations is even more powerful.

Additional Resources

- Guide: Enterprise Kafka Resources

- Blog - Solving Complex Kafka Issues: Enterprise Case Studies

- Blog - Kafka MirrorMaker Overview

- Blog - Kafka Partition Strategies

- Case Study - Credit Card Processing Company Avoids Kafka Exploit

- White Paper - The New Stack: Cassandra, Kafka, and Spark

- Blog - 5 Apache Kafka Security Best Practices

- Blog - Kafka vs. RabbitMQ

- Blog - Kafka Streams vs. Flink