March 5, 2025

ZooKeeper was removed entirely in the latest major Apache Kafka release (Kafka 4) and replaced by Kafka Raft, or KRaft, mode. Many developers welcomed this change, but it has a major impact on teams still running Kafka with ZooKeeper, who must now determine an upgrade path because ZooKeeper is no longer supported.

In this blog, our expert explains what KRaft mode is, the difference between KRaft vs. ZooKeeper deployments, what to consider when planning your KRaft migration, and how your environment will look different when you're running Kafka without ZooKeeper.

What Is KRaft Mode?

Kafka Raft, or KRaft, is Kafka’s implementation of the Raft consensus algorithm. (Raft loosely stands for Reliable, Replicated, Redundant, And Fault Tolerant.)

Created as an alternative to the Paxos family of algorithms, the Raft consensus protocol is meant to be a simpler consensus implementation that is easier to understand than Paxos. Paxos and Raft operate in a similar manner under normal, stable operating conditions, and both protocols accomplish the following:

- The leader writes an operation to its log and asks the following servers to do the same

- The operation is marked as “committed” once a majority of servers acknowledge it

This results in a consensus-based change to the state machine, or in this specific case, the Kafka cluster.
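The majority rule above can be sketched in a few lines of Python. This is an illustrative sketch only, not Kafka's implementation; the function names `majority` and `is_committed` are made up for this example, and the acknowledgement count is assumed to include the leader itself.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that forms a majority of the cluster."""
    return cluster_size // 2 + 1


def is_committed(ack_count: int, cluster_size: int) -> bool:
    """An operation is committed once a majority of servers hold it in their logs.

    ack_count is assumed to include the leader's own log write.
    """
    return ack_count >= majority(cluster_size)


# Five-server quorum: the leader plus two followers is enough.
print(is_committed(ack_count=3, cluster_size=5))  # True
print(is_committed(ack_count=2, cluster_size=5))  # False
```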

The main difference between Raft and Paxos, however, appears when operations are not normal and a new leader must be elected. Both algorithms guarantee that the new leader’s log will contain the most up-to-date commits, but how they accomplish this differs.

In Paxos, the leader election contains not only the proposal and subsequent vote, but must also carry any log entries the candidate is missing. Followers in Paxos implementations can vote for any candidate, and once a candidate is elected, the new leader uses this data to bring its log up to date.

In Raft, on the other hand, followers will only vote for a candidate if the candidate’s log is at least as up to date as the follower’s log. This means only an up-to-date candidate can be elected as leader. Ultimately, both protocols are remarkably similar in their approach to solving the consensus problem. However, because Raft makes some base assumptions about the data, namely the order of commits in the log, it can elect leaders more efficiently.
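As a rough illustration of the Raft voting rule, the sketch below compares the last entry of each log: a higher term wins, and equal terms fall back to the longer log, as described in the Raft paper. The `LogPosition` type and function names are hypothetical, and the sketch leaves out other parts of a real vote request, such as comparing the request's own term.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogPosition:
    last_term: int   # term (epoch) of the last entry in the log
    last_index: int  # index (offset) of the last entry in the log


def candidate_is_up_to_date(candidate: LogPosition, voter: LogPosition) -> bool:
    """True if the candidate's log is at least as up to date as the voter's."""
    # Tuple comparison: last term first, then last index as the tie-breaker.
    return (candidate.last_term, candidate.last_index) >= (
        voter.last_term,
        voter.last_index,
    )


def grant_vote(candidate: LogPosition, voter: LogPosition, already_voted: bool) -> bool:
    """A follower votes at most once per term, and only for an up-to-date candidate."""
    return (not already_voted) and candidate_is_up_to_date(candidate, voter)


# A newer term beats a longer log from an older term.
print(grant_vote(LogPosition(3, 10), LogPosition(2, 50), already_voted=False))  # True
# Same term, shorter log: the vote is refused.
print(grant_vote(LogPosition(3, 10), LogPosition(3, 12), already_voted=False))  # False
```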

What does this mean with regard to Kafka? From the protocol side of things, not much. ZooKeeper uses its own consensus protocol, ZAB (ZooKeeper Atomic Broadcast), which is much more focused on total ordering of commits to the change log. This focus on commit order makes Raft consensus fit quite well into the Kafka ecosystem.

That said, changes from an infrastructure perspective will be quite noticeable. With each broker having the KRaft logic incorporated into the base code, ZooKeeper nodes will no longer be part of the Kafka infrastructure. Note that this doesn’t necessarily mean fewer servers in the production environment (more on that later).

Don't Rush Your Kafka 4 Migration. Get Kafka LTS.

OpenLogic offers long-term support for Kafka 3.8 and 3.9 for teams who need to delay their migration to Kafka 4. Protect your streaming data pipeline through 2028 with security patches and bug fixes and upgrade when you're ready.

Why Did KRaft Replace ZooKeeper?

To understand why the Kafka community leadership decided to move away from ZooKeeper, we can look directly at KIP-500 for their reasoning. In short, this move was meant to reduce complexity and handle cluster metadata in a more robust fashion. Removing the requirement for ZooKeeper means there is no longer a need to deploy two distinctly different distributed systems. ZooKeeper has different deployment patterns, management tools, and configuration syntax when compared to Kafka. Unifying the functionality into a single system will reduce configuration errors and overall operational complexity.

In addition to simpler operations, treating the metadata as its own event stream means that a single number, an offset, can be used to describe a cluster member's position and be quickly brought up to date. This in effect applies the same principles used for producers and consumers to the Kafka cluster members themselves.

ZooKeeper vs. KRaft: Key Differences

In a ZooKeeper-based Kafka deployment, the cluster consists of several broker nodes and a quorum of ZooKeeper nodes. In this environment, each change to the cluster metadata is treated as an isolated event, with no relationship to previous or future events. When state changes are pushed out to the cluster from the cluster controller, a.k.a. the broker in charge of tracking/electing partition leadership, there is potential for some brokers to not receive all updates, or for stale updates to create race conditions as we’ve seen in some larger Kafka installations. Ultimately, these failure points have the potential to leave brokers in divergent states.

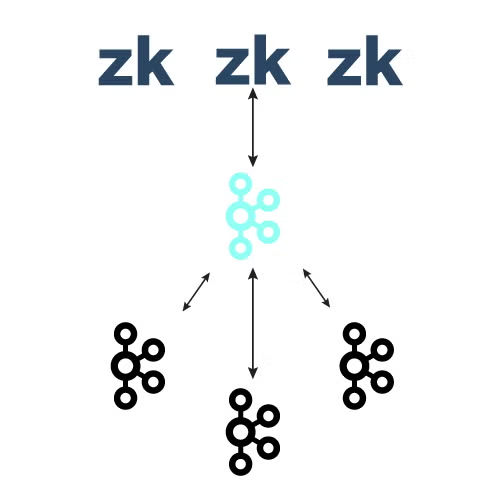

While not entirely accurate, as all broker nodes can (and do) talk to ZooKeeper, the image below is a basic example of what that looks like:

In contrast, the metadata in KRaft is stored within Kafka itself and ZooKeeper is effectively replaced by a quorum of Kafka controllers. The controller nodes form a Raft quorum that elects the active controller, which manages the metadata partition. This log contains everything that used to be found in ZooKeeper: topics, partitions, ISRs, configuration data, and so on are all located in this metadata partition.

Using the Raft algorithm, controller nodes elect the leader without the use of an external system like ZooKeeper. The leader, or active controller, is the partition leader for the metadata partition and handles all RPCs from the brokers.

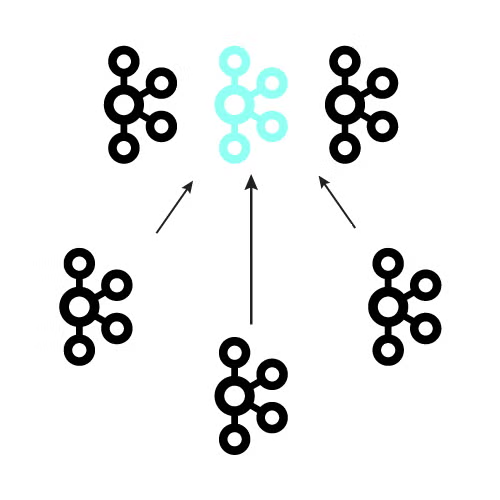

The diagram below is a logical representation of the new cluster environment implementation using KRaft:

Note that in the diagram above there is no longer a double-sided arrow. This denotes another major difference between the two environments: instead of the controller pushing updates to the brokers, the brokers fetch the metadata via a MetadataFetch API. Much like a regular fetch request, each broker tracks the offset of the latest update it fetched and requests only newer updates from the active controller, persisting that metadata to disk for faster startup times.

In most cases, the broker will only need to request the deltas of the metadata log. However, in cases where no data exists on a broker or a broker is too far out of sync, a full metadata set can be shipped. A broker will periodically request metadata and this request will act as a heartbeat as well.
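The delta-versus-full behavior can be pictured with a toy model. The sketch below is not Kafka code: `ToyController` and `handle_fetch` are hypothetical names, the records are plain strings, and the "full" case simply returns every retained record, whereas a real controller would ship a complete metadata set.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ToyController:
    """Hypothetical stand-in for the active controller's metadata log."""
    log_start_offset: int  # oldest offset still retained in the log
    records: List[str]     # retained metadata records, oldest first

    def end_offset(self) -> int:
        return self.log_start_offset + len(self.records)

    def handle_fetch(self, broker_offset: Optional[int]) -> Tuple[str, List[str], int]:
        """Return (kind, records, next_offset) for a broker's fetch request."""
        if broker_offset is None or broker_offset < self.log_start_offset:
            # Broker has no metadata, or is too far behind: ship everything.
            return ("full", list(self.records), self.end_offset())
        # Normal case: ship only the records the broker has not seen yet.
        delta = self.records[broker_offset - self.log_start_offset:]
        return ("delta", delta, self.end_offset())


controller = ToyController(log_start_offset=100, records=["topic-a", "isr-change", "config-b"])
print(controller.handle_fetch(101))   # ('delta', ['isr-change', 'config-b'], 103)
print(controller.handle_fetch(None))  # ('full', ['topic-a', 'isr-change', 'config-b'], 103)
```

The broker would store the returned offset and send it with its next request, which is what lets a single number describe how current that broker's view of the cluster is.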

Previously, when a broker entered or exited a cluster, this was kept track of in ZooKeeper, but now the broker status will be registered directly with the active controller. In a post-ZooKeeper world, cluster membership and metadata updates are tightly coupled. Failure to receive metadata updates will result in eviction from the cluster.

ZooKeeper Deprecation and Removal

KRaft has been considered “production ready” since Kafka 3.3 and ZooKeeper was officially deprecated in Kafka 3.5. It was removed completely with the release of Kafka 4.

KRaft Migration Considerations

As organizations plan their migrations to KRaft environments, there are quite a few things to consider. In a KRaft-based cluster, each Kafka node runs in one of three modes, selected with the process.roles property: broker, controller, or combined (process.roles=broker,controller). In a production cluster, combined mode should be avoided; in other words, brokers and controllers should each have dedicated JVM resources. So, as mentioned previously, doing away with ZooKeeper doesn’t necessarily mean doing away with the compute resources in production. Using combined mode in development or staging environments is perfectly acceptable.
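To make the role choice concrete, here is a small Python sketch that renders the role-related lines of a node's properties file and refuses combined mode for production. In Kafka's configuration the property is spelled `process.roles`, and combined mode is written as `broker,controller`; `node.id` and `controller.quorum.voters` are also real KRaft settings. The helper name `render_node_properties`, the `production` flag, and the host names are assumptions made for this example, and a working node needs further settings (listeners, log directories, and so on) that are omitted here.

```python
from typing import Iterable

VALID_ROLES = {"broker", "controller"}


def render_node_properties(
    node_id: int,
    roles: Iterable[str],
    quorum_voters: str,
    production: bool = True,
) -> str:
    """Render the role-related lines of a KRaft node's properties file."""
    role_list = list(roles)
    unknown = set(role_list) - VALID_ROLES
    if not role_list or unknown:
        raise ValueError(f"roles must be drawn from {sorted(VALID_ROLES)}, got {role_list}")
    if production and len(set(role_list)) > 1:
        # Combined mode is fine for development or staging, not for production.
        raise ValueError("combined mode (broker,controller) is not recommended in production")
    return "\n".join(
        [
            f"process.roles={','.join(role_list)}",
            f"node.id={node_id}",
            f"controller.quorum.voters={quorum_voters}",
        ]
    )


# Hypothetical three-controller quorum; host names are placeholders.
voters = "1@ctrl-1:9093,2@ctrl-2:9093,3@ctrl-3:9093"
print(render_node_properties(1, ["controller"], voters))
print(render_node_properties(4, ["broker", "controller"], voters, production=False))
```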

Prior to the release of Kafka 4, several upgrades and changes to the KRaft implementation were completed and released, including:

- Configuring SCRAM users via the administrative API: With the completion and implementation of KIP-900 in Kafka 3.5.0 for inter-broker communications, the kafka-storage tool can be used as a mechanism to configure SCRAM.

- Supporting JBOD configurations with multiple storage directories: JBOD support was introduced in 3.7 as an early access feature. With the completion of KIP-858 and its implementation in 3.8, JBOD is no longer limited to early access.

- Modifying certain dynamic configurations on the standalone KRaft controller: In early releases of Kafka KRaft, some configuration items could not be updated dynamically, but as far as we are aware, these have mostly been fixed. The “missing features” verbiage should be removed with 4.0 (see conversation here).

- Delegation tokens: KIP-900 also paved the way for “delegation token” support. With the completion of KAFKA-15219 in 3.6, delegation tokens are now supported in KRaft mode.

Although a fully-fledged and supported upgrade path has been implemented and can be used to move clusters from ZooKeeper mode to KRaft mode, in-place upgrades generally should be avoided. At OpenLogic, we typically recommend lift-and-shift style, blue-green deployments instead. However, given the complexity of some Kafka clusters, having an official migration path is very much a welcome tool in the tool belt.

While detailing the KRaft migration process would require an entire series of blog posts, you can find an overview of the process in the Kafka documentation here. Of particular interest, though, is the requirement to upgrade to Kafka 3.9.0. The metadata version cannot be upgraded during the migration, so inter.broker.protocol.version must be at 3.9 prior to the migration. So, even if your organization isn’t planning on migrating to KRaft anytime soon, it still makes sense to plan your upgrade to 3.9 sooner rather than later.
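A pre-migration check for this requirement could look something like the sketch below. It only inspects the text of a broker properties file; the helper names are hypothetical, and treating a missing `inter.broker.protocol.version` entry as "not verified" is an assumption of this example rather than documented Kafka behavior.

```python
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def ibp_ready_for_migration(props: Dict[str, str], required: str = "3.9") -> bool:
    """True only if inter.broker.protocol.version is explicitly set to 3.9."""
    ibp = props.get("inter.broker.protocol.version")
    if ibp is None:
        # Assumption: an unset value cannot be verified from the file alone.
        return False
    return ibp == required or ibp.startswith(required + "-")


sample = """
# broker settings (illustrative)
broker.id=1
inter.broker.protocol.version=3.9
"""
print(ibp_ready_for_migration(parse_properties(sample)))  # True
```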

Get the Decision Maker's Guide to Apache Kafka

This white paper has everything you need to master Kafka's complexity, from partition strategies and security best practices to tips for running Kafka on K8s.

KRaft Mode FAQ

What benefits would my organization see, if any, from migrating to KRaft?

The most basic benefit for any organization is of course being able to stay up to date on your software versions. Now that ZooKeeper has been removed in Kafka 4, staying updated in ZK mode is no longer possible. Organizations will also see a decrease in cluster complexity, as Kafka handles metadata natively instead of relying on third-party software.

Lastly, organizations operating at the upper levels of cluster size will see a considerable increase in reliability and service continuity in KRaft mode. While ZooKeeper is a reliable coordination service for a myriad of open source projects, whenever our customers with extremely large clusters (i.e., 30 to 40 or more brokers with thousands of partitions) encounter severe issues, the cause often turns out to be ZooKeeper.

If we migrate from ZooKeeper to KRaft, can we decommission our dedicated ZK hardware?

Most likely, no, at least not in production. Production KRaft controllers should be deployed in dedicated controller mode, so they will need dedicated compute just as ZooKeeper does in production. However, non-production clusters can run in combined mode.

We have “N” number of ZooKeeper nodes; how many KRaft controller nodes should we use?

At a minimum, organizations should deploy 3 controller nodes in production. The system requirements for ZooKeeper and controller nodes are quite similar, though, so deploying the same number of controller nodes is a reasonable place to start. Ultimately, a thorough load and performance testing protocol should be followed to validate this.
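When choosing a controller count, it helps to remember the majority arithmetic behind any Raft quorum: a quorum of n voters keeps working as long as a majority is alive, so it tolerates (n - 1) // 2 failures. The short sketch below just evaluates that formula; the function name is made up for illustration.

```python
def tolerated_failures(controller_count: int) -> int:
    """Number of controllers that can fail while a majority remains."""
    if controller_count < 1:
        raise ValueError("controller_count must be at least 1")
    return (controller_count - 1) // 2


for n in (3, 4, 5):
    print(n, "controllers tolerate", tolerated_failures(n), "failure(s)")
# 3 controllers tolerate 1 failure(s)
# 4 controllers tolerate 1 failure(s)
# 5 controllers tolerate 2 failure(s)
```

Note that an even controller count adds no fault tolerance over the odd count below it, which is why quorums are usually sized at 3 or 5.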

If we are running Kafka via Strimzi Kubernetes operator, can we start using KRaft?

Yes! However, be aware that as of version 0.45.0, there are some limitations around controllers. Strimzi currently continues to use static controller quorums, which limits the use of dynamic controller quorums. More information can be found in the Strimzi documentation here.

What if we are unable to migrate right away?

If you cannot migrate before your version of Kafka reaches end of life, Kafka long-term support gives you access to security patches until you are able to upgrade.

Final Thoughts

For greenfield implementations, using KRaft should be a no-brainer, but for mature Kafka environments, migrating will be a complete rip and replace for your cluster, with all the complications that could follow. Creating a detailed migration plan, with blue/green deployment strategies, is crucial in such cases. And if your team is lacking in Kafka expertise, seeking out external support to guide your Kafka migration would also be a good idea.

This Blog Was Written By One of Our Kafka Experts.

OpenLogic Kafka experts can provide 24/7 technical support, consultations, migration/upgrade assistance, or even train your team.

Additional Resources

- Webinar - Kafka Gone Wrong: How to Avoid Data Disasters

- Blog - Introducing the Kafka Service Bundle

- Blog - Apache Kafka vs. Confluent Kafka

- Blog - Kafka 4: Changes and Upgrade Considerations

- Blog - Kafka 4.1: Enhancements in the Latest Release

- Guide - Enterprise Kafka Resources

- Blog - Kafka on Kubernetes with Strimzi

- Blog - 8 Apache Kafka Security Best Practices